Aside from a minimal amount of standard metadata, video is an opaque medium — an informational 'black box' where the data lacks schema, context, or structure.

It may not always be so — already, mobile-oriented cameras such as Apple's TrueDepth and Samsung's DepthVision can capture depth information and stream it directly into computer vision applications, for the development of augmented reality frameworks, environmental research, and many other possible uses. For example, in the past few years we’ve seen a steadily growing adoption of computer vision applications for retail.

Later generations of the most advanced mobile capture devices may also feature live semantic segmentation, with complex descriptions added directly to the footage metadata via post-processing image-to-text interpretation, running on a new wave of mobile neural network chips. Standard video footage of the near future may even feature granular and updating geolocation data and the ability to recognize dangerous or violent situations, and trigger appropriate local actions on the device.

However, besides privacy concerns that may impede the development of AI-enabled video capture systems, upgrading to them would remain prohibitively expensive in the long term. Therefore, the efforts of academic and corporate computer vision researchers over the last 5-6 years have centered on extracting meaningful data from 'dumb' video, with an emphasis on optimized and lightweight frameworks for automated data collection and object detection in video footage.

This process has been accelerated by the increasing availability of market-leading free and open-source software (FOSS), resulting in a slew of popular and effective platform-agnostic modules, libraries and repositories. Many of these FOSS solutions are also implemented by major SaaS/MLaaS providers, whose offerings can either be integrated into your project in the long term or used at the ideation stage for rapid prototyping.

The challenges of video object detection

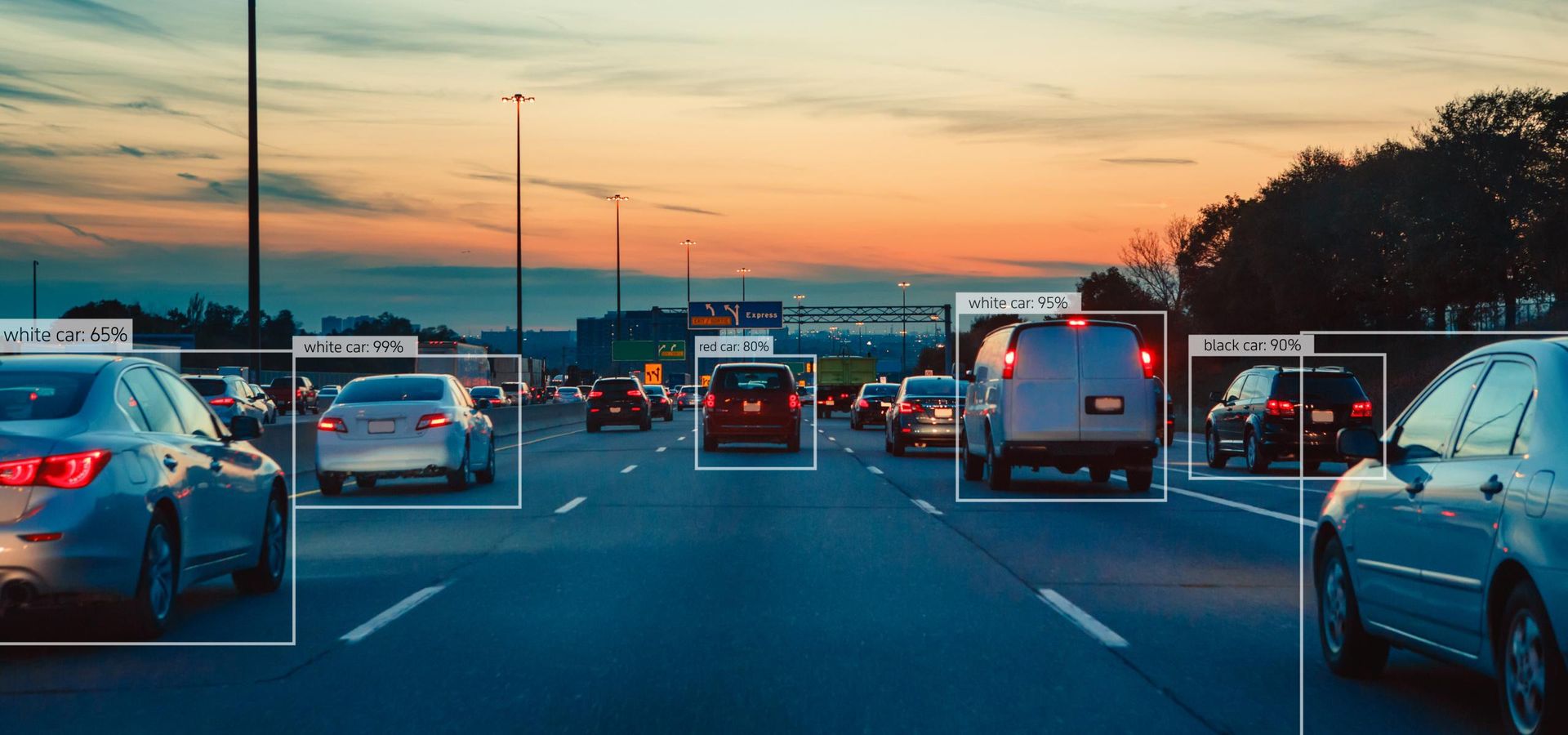

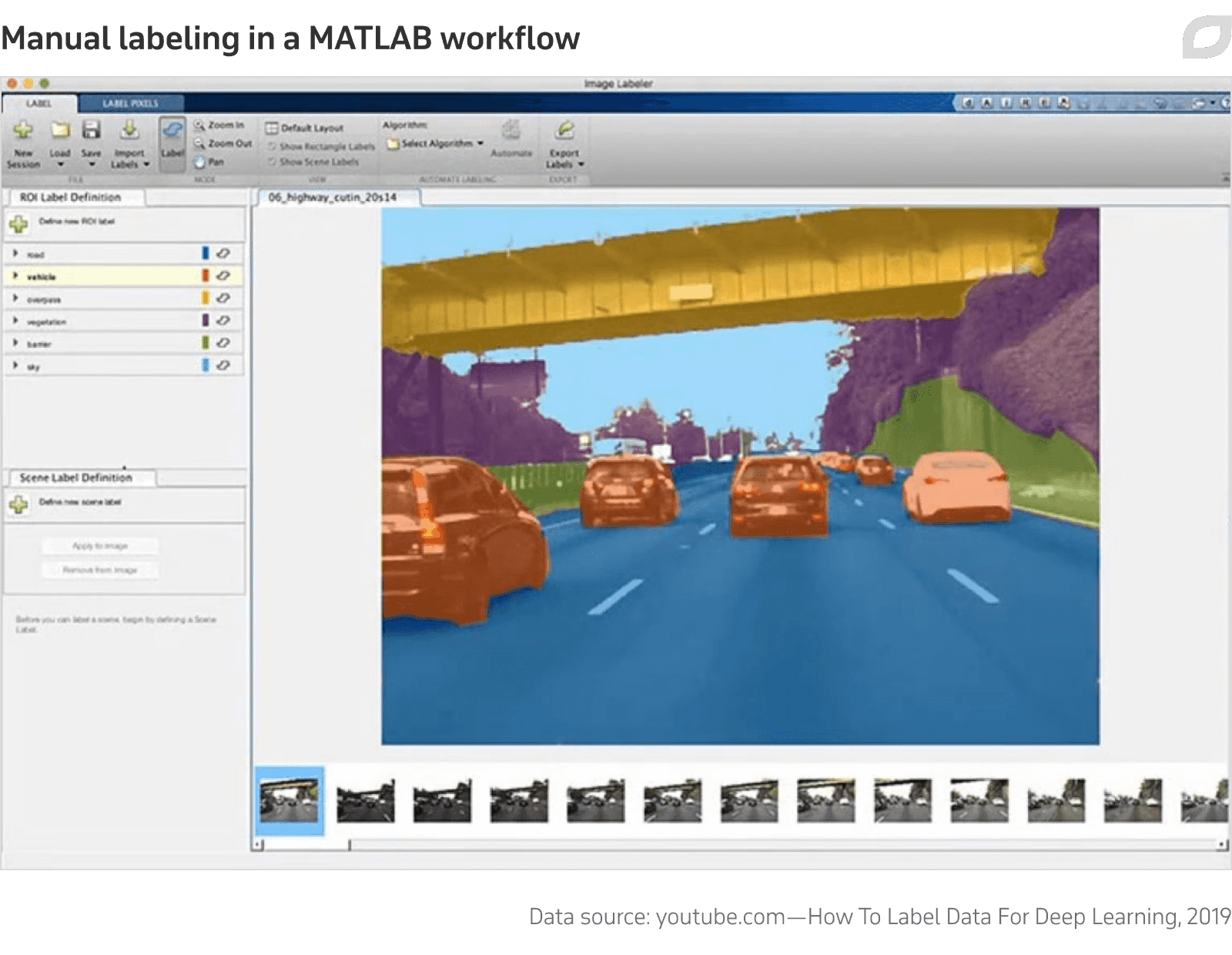

Object detection and automated visual inspection require the training of machine learning models, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), on images where objects have been manually annotated and associated with a high-level concept (such as 'face', 'horse' or 'building').

After training on the data, a machine learning system can infer the existence of similar objects in new data, within certain parameters of tolerance. This assumes that the model was not over-fitted during training, whether through poor data selection, inappropriate learning rate schedules, or other factors that can make an algorithm ineffective on 'unseen' input (i.e. data that is similar, but not identical, to the data that the model was trained on).

Tackling occlusion, reacquisition and motion blur

One approach to video object detection is to split a video into its constituent frames and apply an image recognition algorithm to each frame. However, this jettisons all the potential benefits of inferring temporal information from the video.
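The frame-by-frame approach can be sketched in a few lines. This is a minimal illustration only: the detection tuple layout and the `stub_detector` function are assumptions standing in for a real image detector, and a "frame" here is simply a list of visible objects rather than pixel data.

```python
from typing import Any, Callable, Iterable, List, Tuple

# Assumed detection layout: (label, confidence, (x1, y1, x2, y2)).
Detection = Tuple[str, float, Tuple[int, int, int, int]]


def detect_per_frame(
    frames: Iterable[Any],
    detector: Callable[[Any], List[Detection]],
) -> List[List[Detection]]:
    """Run the detector on each frame in isolation; no state is carried over."""
    return [detector(frame) for frame in frames]


def stub_detector(frame: Any) -> List[Detection]:
    """Hypothetical stand-in: a 'frame' is already its list of visible objects."""
    return list(frame)


frames = [
    [("person", 0.9, (10, 10, 50, 90))],
    [],  # the subject is hidden in this frame
    [("person", 0.8, (14, 10, 54, 90))],
]
results = detect_per_frame(frames, stub_detector)
```

Each entry in `results` is computed independently, so nothing in the output says that the person in the first frame and the person in the third frame are the same subject.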

Consider, for example, the challenge of occlusion and reacquisition: if a video object recognition framework identifies a man who is then briefly obscured by a passing pedestrian, the system will need a temporal perspective in order to understand that it has 'lost' the subject, and prioritize reacquisition.

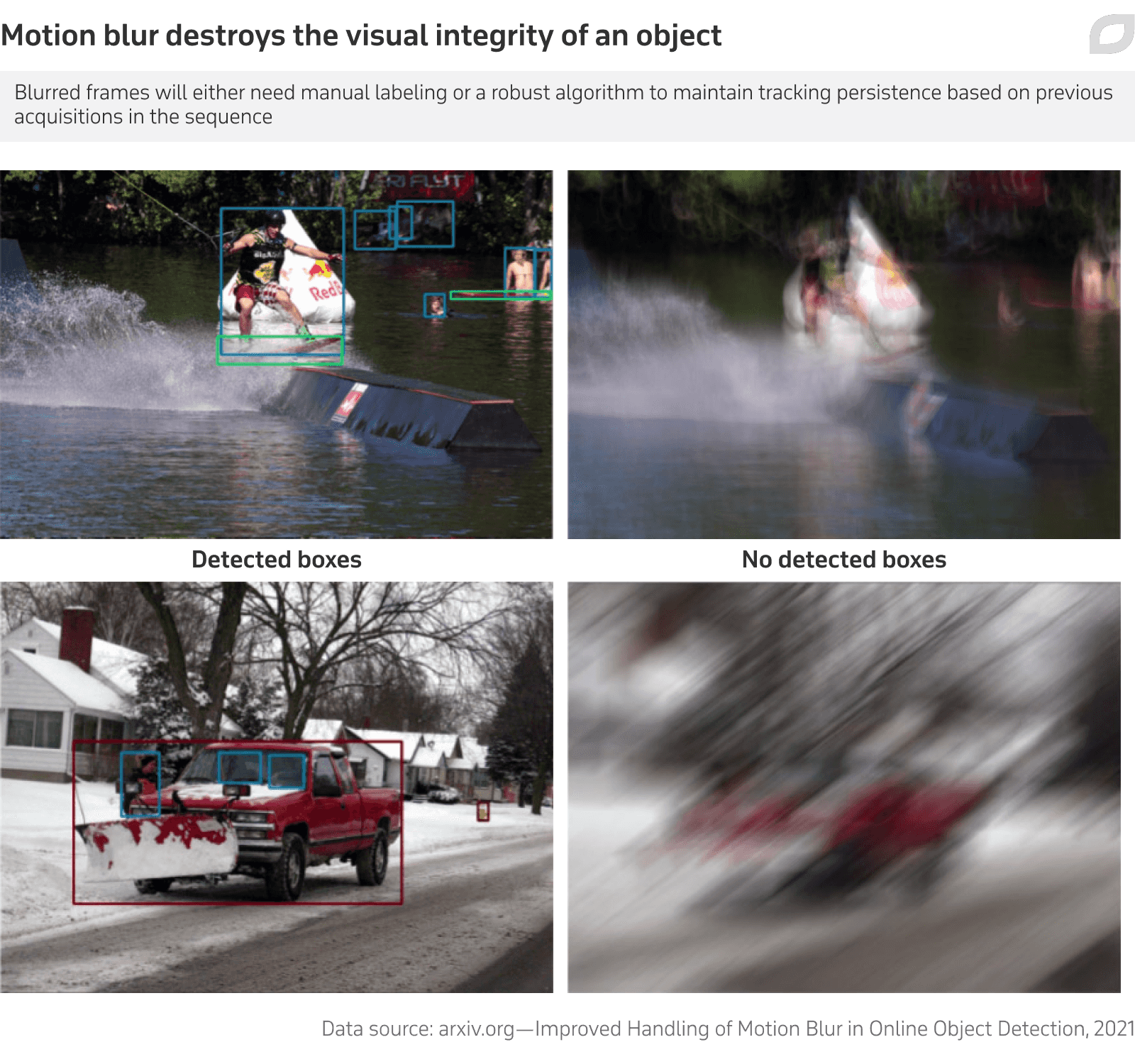

A subject can be lost not only because of occlusion, but also because of motion blur, where camera movement, subject movement, or both disrupt the frame enough that features become streaked or out of focus, beyond the ability of the recognition framework to identify them.

If the system is just parsing frames, no such understanding is possible, because each frame is treated as a complete (and closed) episode.

Besides this consideration, it costs a video object detection framework less in computational terms to identify and track a subject than it does to repeatedly register the same object on a per-frame basis, since from frame #2 onwards, the system knows roughly what it is looking for.
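To make the idea of a temporal perspective concrete, here is a minimal sketch of a tracker that keeps a subject "alive" for a few frames after losing it, so that the same identity can be reacquired. It is an illustration under stated assumptions, not a production tracker: the greedy IoU (intersection over union) matching, the class names, and the `iou_threshold` and `max_missed` defaults are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    missed: int = 0  # consecutive frames without a matching detection


class SimpleTracker:
    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5) -> None:
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.tracks: List[Track] = []
        self._next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Match detections to known tracks; return {track_id: box} seen this frame."""
        unmatched = list(detections)
        seen: Dict[int, Box] = {}
        for track in self.tracks:
            best = max(unmatched, key=lambda d: iou(track.box, d), default=None)
            if best is not None and iou(track.box, best) >= self.iou_threshold:
                track.box, track.missed = best, 0
                unmatched.remove(best)
                seen[track.track_id] = best
            else:
                # Subject lost (occlusion, blur): remember it for later reacquisition.
                track.missed += 1
        # Forget subjects that have been lost for too long.
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        # Any detection left over starts a new track.
        for box in unmatched:
            self.tracks.append(Track(self._next_id, box))
            seen[self._next_id] = box
            self._next_id += 1
        return seen
```

Feeding this tracker a detection, then an empty frame, then a nearby detection returns the same track ID before and after the gap, which is the behavior a purely per-frame system cannot provide.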

Offline video object detection

Earlier approaches to temporal coherence relied on post-processing identification, where the user must await calculation, and where the system receives enough processing time (and access to generous offline resources) to achieve a high level of accuracy.

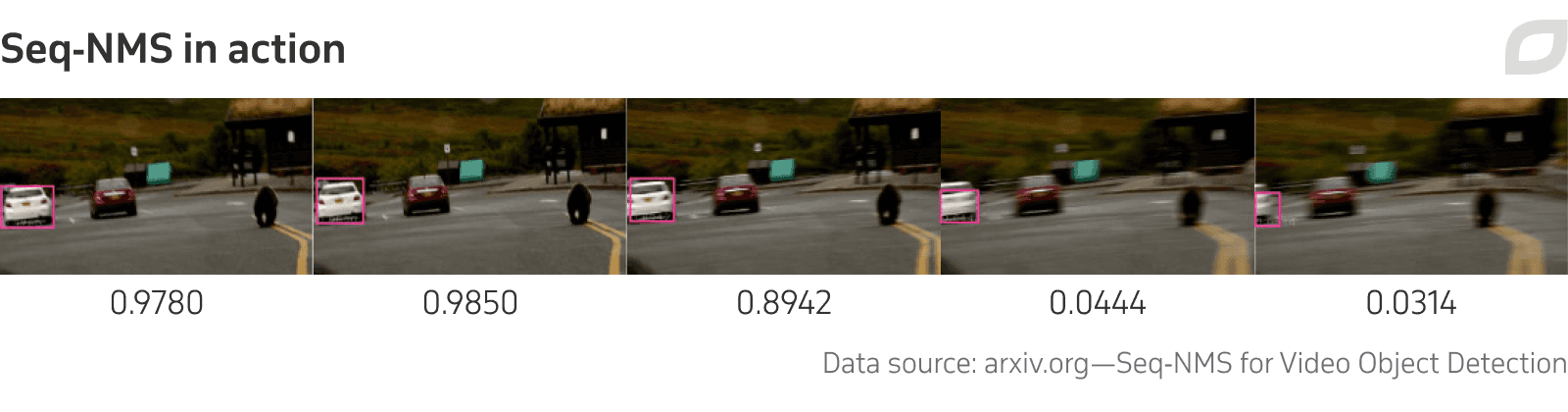

For instance, Seq-NMS (Sequence Non-Maximal Suppression), a prize-winner in the 2015 ImageNet Large Scale Visual Recognition Challenge, rated the likelihood of a reacquired object based on content from adjacent frames, analyzed from short sequences within the input video.

This approach evaluates the behavior of perceived objects rather than focusing on their detection and identification, and works best as an adjunct for other offline video-based object detection systems.
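The core idea of scoring a detection by its neighbors in adjacent frames can be sketched as follows. This is a simplified illustration in the spirit of Seq-NMS, not the published implementation: it finds only the single best chain of overlapping boxes and rescores it by averaging, whereas the full method repeats the process and suppresses overlapping boxes. The function names and the `link_iou` default are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def best_sequence(frames: List[List[Detection]], link_iou: float = 0.5) -> List[Tuple[int, int]]:
    """Return the highest-scoring chain as (frame_index, detection_index) pairs."""
    best: List[List[float]] = []  # best[t][i]: top cumulative score of a chain ending here
    prev: List[List[int]] = []    # prev[t][i]: predecessor index in frame t - 1, or -1
    for t, dets in enumerate(frames):
        best.append([score for _, score in dets])
        prev.append([-1] * len(dets))
        if t == 0:
            continue
        for i, (box, score) in enumerate(dets):
            for j, (prev_box, _) in enumerate(frames[t - 1]):
                candidate = best[t - 1][j] + score
                if iou(prev_box, box) >= link_iou and candidate > best[t][i]:
                    best[t][i], prev[t][i] = candidate, j
    ends = [(t, i) for t in range(len(frames)) for i in range(len(frames[t]))]
    if not ends:
        return []
    t, i = max(ends, key=lambda e: best[e[0]][e[1]])
    chain = []
    while i != -1:  # walk back through the predecessor links
        chain.append((t, i))
        i = prev[t][i]
        t -= 1
    return chain[::-1]


def rescore(frames: List[List[Detection]], chain: List[Tuple[int, int]]) -> List[List[Detection]]:
    """Give every detection on the chain the chain's average score."""
    out = [list(dets) for dets in frames]
    if not chain:
        return out
    average = sum(frames[t][i][1] for t, i in chain) / len(chain)
    for t, i in chain:
        out[t][i] = (frames[t][i][0], average)
    return out
```

With three overlapping boxes scored 0.9, 0.3 and 0.9, the weak middle detection is lifted to the chain average, which is how evidence from adjacent frames can rescue a detection that a per-frame threshold would discard.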

Various other post-facto video object detection systems have emerged over the last ten years, including some that have attempted to use 3D convolutional networks, which analyze many images simultaneously, but not in a way that's generally applicable for the development of real-time video object detection algorithms.

Real-time video object detection

The current industry imperative has moved towards the development of real-time detection systems, with offline frameworks effectively migrating to a 'dataset development' role for low or zero latency detection systems.

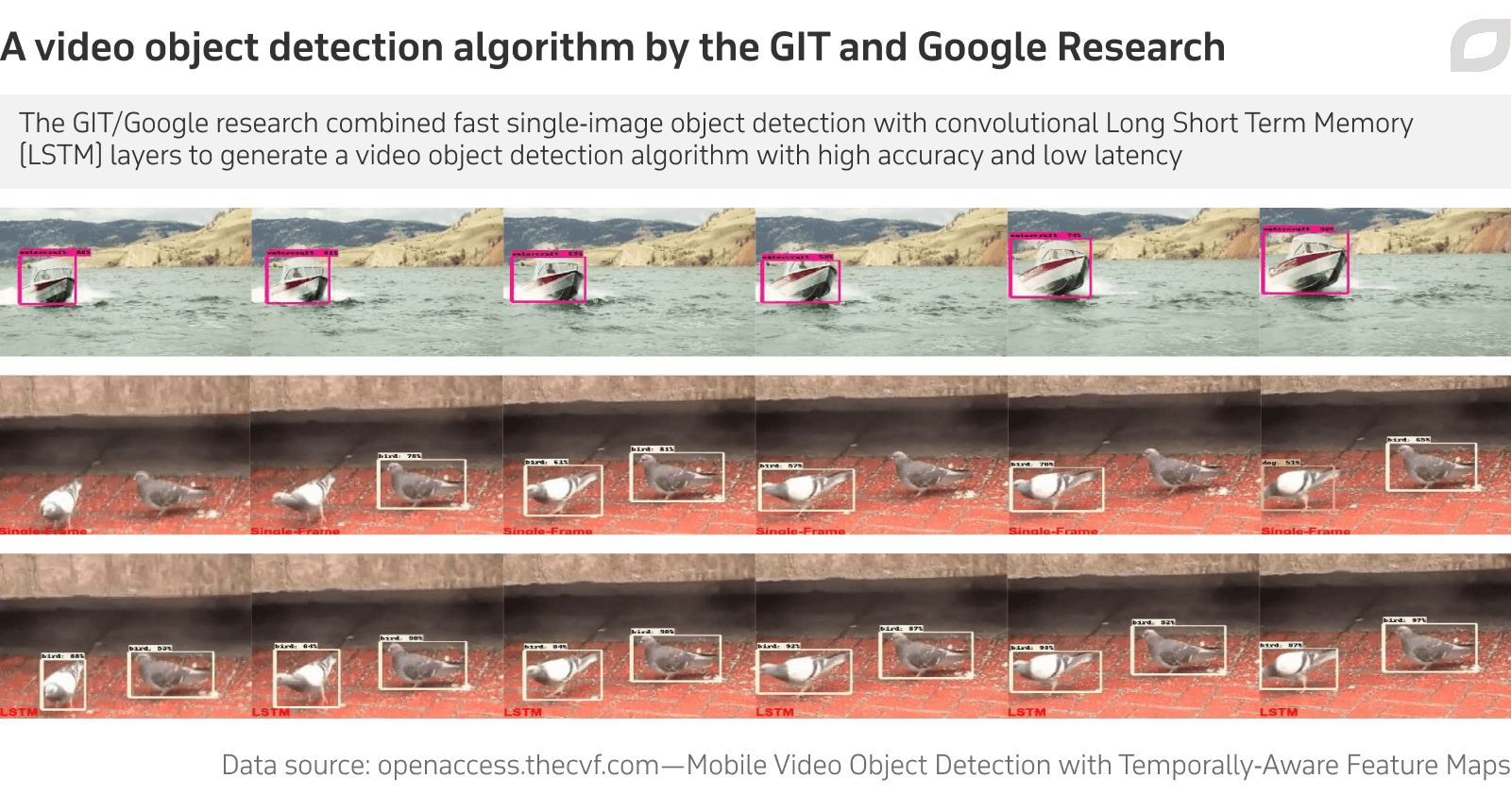

Since recurrent neural networks center on sequential data, they are well-suited to video object detection. In 2017, a collaboration between the Georgia Institute of Technology (GIT) and Google Research offered an RNN-based video object detection method that achieved an inference speed of up to 15 frames per second operating on a mobile CPU.

Optical flow

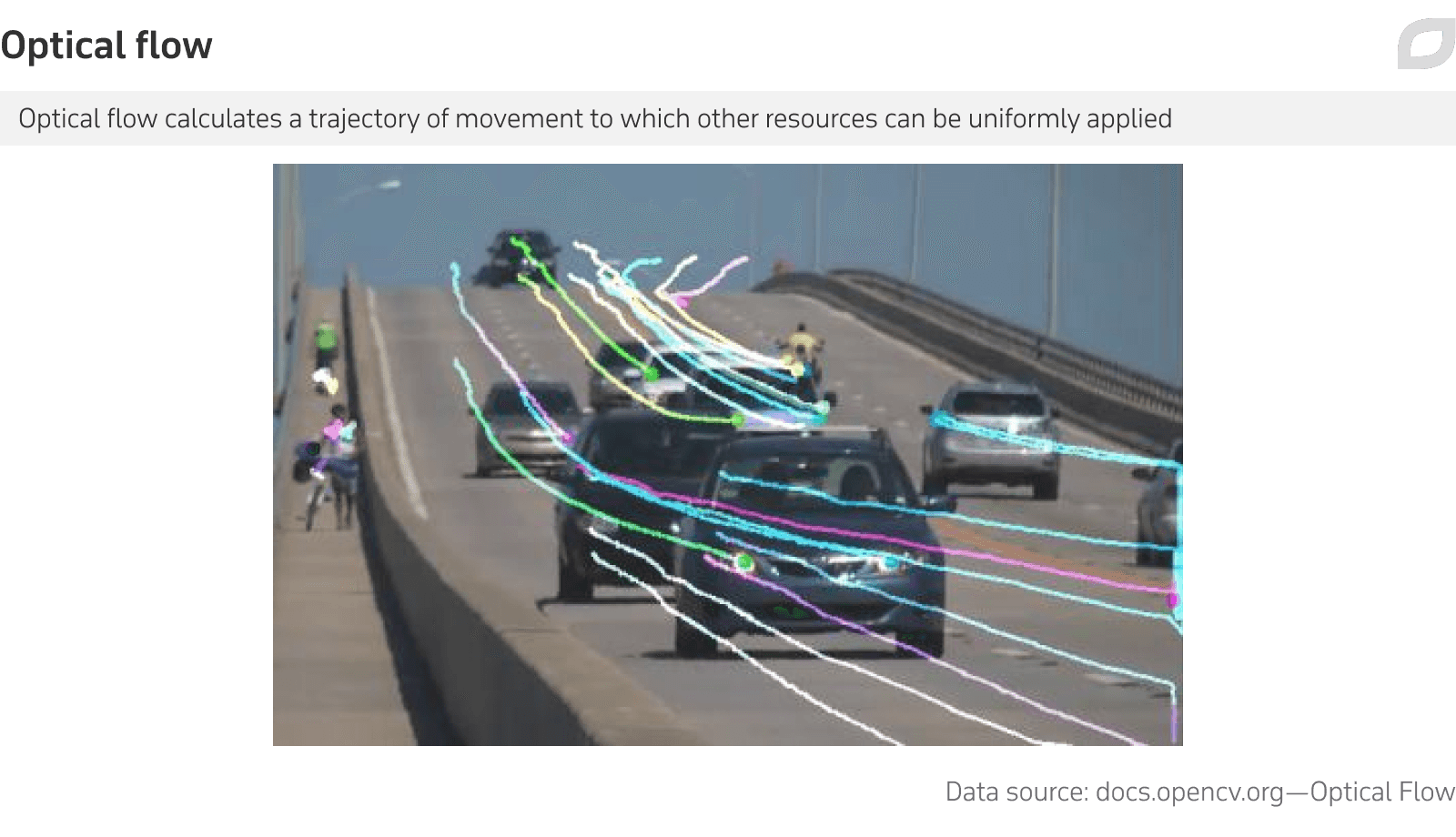

Optical flow estimation generates a 2D vector field that represents the shift of pixels between one frame and an adjacent frame (preceding or following).
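As a rough illustration of what such a vector field looks like, the sketch below estimates one displacement vector per block of pixels by exhaustive block matching. Block matching is a deliberately simple stand-in chosen for readability; it is not Lucas-Kanade or a learned estimator, and the function names, block size, and search radius are assumptions.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale image as rows of pixel intensities


def block_sad(prev: Frame, curr: Frame, x: int, y: int, dx: int, dy: int, size: int) -> int:
    """Sum of absolute differences between a block in prev and its shifted copy in curr."""
    return sum(
        abs(prev[y + r][x + c] - curr[y + dy + r][x + dx + c])
        for r in range(size)
        for c in range(size)
    )


def estimate_flow(prev: Frame, curr: Frame, size: int = 4, search: int = 2) -> List[List[Tuple[int, int]]]:
    """Return one (dx, dy) vector per block: a coarse 2D vector field."""
    height, width = len(prev), len(prev[0])
    field = []
    for y in range(0, height - size + 1, size):
        row = []
        for x in range(0, width - size + 1, size):
            # Only consider shifts that keep the block inside the frame.
            candidates = [
                (dx, dy)
                for dy in range(-search, search + 1)
                for dx in range(-search, search + 1)
                if 0 <= x + dx <= width - size and 0 <= y + dy <= height - size
            ]
            # Lowest matching error wins; ties go to the smallest displacement.
            row.append(min(
                candidates,
                key=lambda d: (block_sad(prev, curr, x, y, d[0], d[1], size), abs(d[0]) + abs(d[1])),
            ))
        field.append(row)
    return field
```

For two 8x8 frames in which a small bright square shifts one pixel to the right, the block containing the square receives the vector `(1, 0)` while the static blocks receive `(0, 0)`.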

Optical flow can map the progress of individual pixel groupings across a length of video footage, providing guidelines along which useful operations can be performed. It's been used for a long time in traditional high-data video environments, such as video editing suites, to provide motion vectors along which filters can be applied, and for animation purposes.

In terms of video object recognition, modern optical flow estimation enables the calculation of discontinuous trajectories of objects, since it can calculate median paths in a way that's not possible with older methods such as the Lucas-Kanade approach. The latter assumes constant flow only within a local neighborhood of pixels, and cannot form an overarching connection between multiple groups of action, even though these groupings may represent the same event, interrupted by factors such as occlusion and motion blur.

End-to-end optical flow evaluation is naturally only possible as a post-processing task. Results from this can then be used to develop algorithms for optical flow estimation, which can be applied to live video streams.

Many of the new crop of optical flow-based video detection algorithms use sparse feature propagation to generate continuous flow estimations, and bridge the gaps where happenstance has interrupted the action.

Additionally, new datasets relating to optical flow emerge frequently, such as the MPI-Sintel Optical Flow Dataset, which features extended sequence clips, motion blur, unfocused instances, atmospheric distortion, specular reflections, large motions, and many other challenging facets for video object detection and recognition.

Open-source video object detection frameworks and libraries

TensorFlow Object Detection API

The TensorFlow Object Detection API (TOD API) offers GPU-accelerated video object detection in the context of perhaps the most widely-diffused and popular library in the computer vision sector. It's therefore a notable contender for inclusion in a custom video object detection project.

TOD API allows direct access to useful pre-trained detection models such as Faster R-CNN and Mask R-CNN (trained on COCO), and can be used as a frontend for a variety of other object detection networks, such as YOLO (see below), though many of these will need to be implemented manually.

If your primary interest is in agile and mobile video object detection, the TensorFlow hub currently features seven native models that can be implemented without further hooks or architecture. A wider variety of models is available for desktop and server architectures.

TOD API's tutorial database includes a guided walk-through on detecting objects with a webcam, and the standard and extent of documentation is a notable advantage for this framework.

YOLO: Real-Time Object Detection

You-Only-Look-Once (YOLO) is an independently-maintained video object detection system that can operate in real time at very high frame rates: the original paper reports 45fps for the base model, and up to 155fps for the smaller Fast YOLO variant. YOLO was initially released in 2015 in concert with research contributions from Facebook.

Currently at version 5, YOLO uses a fully convolutional neural network (FCNN) to predict multiple bounding boxes simultaneously, instead of iterating through an image multiple times in order to gather a series of predictions, as with most of the native methods in TensorFlow and in certain other popular frameworks.

This approach offers the highest speed available in a comparable framework, though there can be a trade-off between accuracy and latency, depending on the nature and scope of the governing model being used for detection, as well as the quality of local hardware resources. YOLO is highly generalizable, and can be more receptive to 'unseen' domains than traditional R-CNNs.
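The single-pass prediction described above can be illustrated by decoding a grid of cell outputs into bounding boxes in one sweep. This sketch assumes a simplified parameterization in the style of the original YOLO paper (box center as an offset within its grid cell, width and height as fractions of the image), with one box per cell and class scores omitted; later YOLO versions use anchor boxes and decode differently. The function name and the `min_conf` default are hypothetical.

```python
from typing import List, Tuple

# One cell prediction: (x_offset, y_offset, width, height, confidence), all in [0, 1].
CellPrediction = Tuple[float, float, float, float, float]
# Decoded box in pixels: (x1, y1, x2, y2, confidence).
ScoredBox = Tuple[float, float, float, float, float]


def decode_grid(
    grid: List[List[CellPrediction]],
    image_w: int,
    image_h: int,
    min_conf: float = 0.5,
) -> List[ScoredBox]:
    """Turn every confident cell of the grid into a pixel-space box in one pass."""
    rows, cols = len(grid), len(grid[0])
    boxes: List[ScoredBox] = []
    for row in range(rows):
        for col in range(cols):
            x_off, y_off, w, h, conf = grid[row][col]
            if conf < min_conf:
                continue
            # The center is expressed relative to the cell; size relative to the image.
            cx = (col + x_off) / cols * image_w
            cy = (row + y_off) / rows * image_h
            bw, bh = w * image_w, h * image_h
            boxes.append((cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2, conf))
    return boxes
```

All boxes come out of one traversal of the network's output, which is the property that removes the need for repeated passes over the image. In practice, overlapping boxes would then be pruned with non-maximum suppression.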

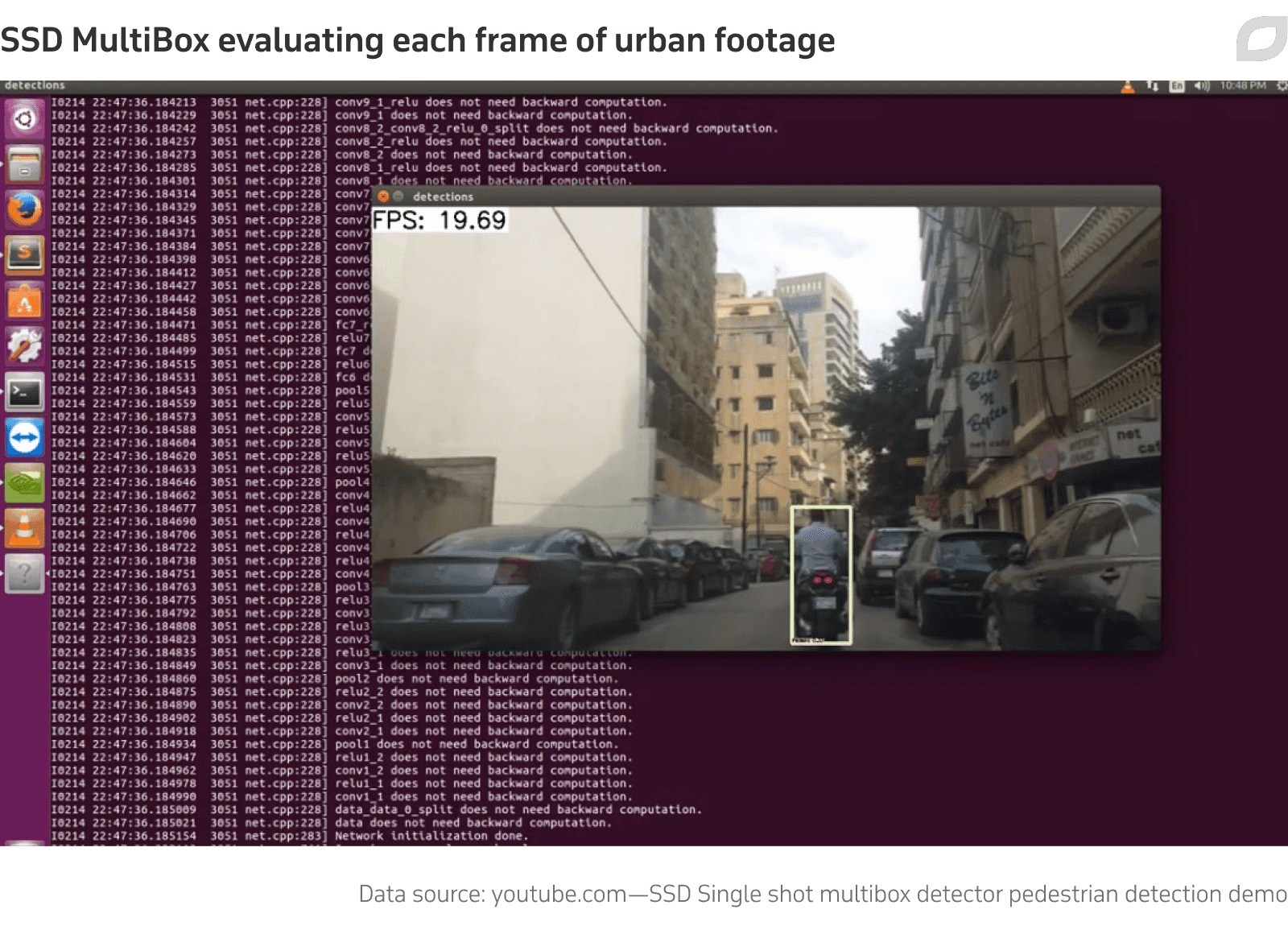

SSD MultiBox

SSD MultiBox is a Caffe-based single-shot detector (SSD) system that, like YOLO, uses a single neural network to generate a feature map, from which probability scores are calculated for objects detected within slices from a single image.

Though the system's two versions — SSD300 and SSD512 — each claim higher frame rate/accuracy scores than YOLO, it should be considered that they were not tested against a default YOLO install, and that YOLO has since fragmented into many useful iterations.

In one 2020 study comparing SSD vs. YOLO, independent researchers concluded that YOLO V3 produced superior average detection results, though a higher rate of false positives than SSD. Both were concluded to be very capable frameworks for a custom video object detection solution. Contrarily, a study the following year concluded that TensorFlow's SSD MobileNet v2 demonstrated improved accuracy and generalization over YOLO V4, though not by an overwhelming margin.
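Comparisons of this kind rest on counting correct detections and false positives against annotated ground truth. The sketch below shows one common way to do that for a single frame; it is an illustration only and does not reproduce the methodology of either study. The greedy matching, the function names, and the 0.5 IoU threshold are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def evaluate(
    predictions: List[Box],
    ground_truth: List[Box],
    iou_threshold: float = 0.5,
) -> Tuple[float, float, int]:
    """Return (precision, recall, false_positives) for one frame."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        best = max(unmatched, key=lambda g: iou(pred, g), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            true_positives += 1
            unmatched.remove(best)  # each annotated object can be matched only once
    false_positives = len(predictions) - true_positives
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall, false_positives
```

A detector can score well on recall (finding most objects) while still producing many false positives, which is why the two figures are usually reported together.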

ImageAI

ImageAI is a Patreon-backed open-source machine learning library for Python that supports video object detection and analysis. It can operate as a host for multiple relevant libraries and other frameworks, including RetinaNet, YOLO V3 and TinyYOLO V3, trained on the ImageNet-1000 dataset.

The library switched to a PyTorch backend in June of 2021. It offers extensive documentation but lacks the mature ecosystem and extensibility of the sector leaders listed above.

Its intended simplicity of use and limited configurability may represent a hindrance to a more complex set of project needs, or else a welcome plug-and-play solution for frameworks with wider scope than image detection — particularly for projects centering on object classes featured in common and popular datasets.

TorchVision

TorchVision offers computer vision functionality for the Facebook-led PyTorch project. Out of the box, it has direct support for thirty of the most popular datasets, including COCO, CelebA, Cityscapes, ImageNet, and KITTI. These can be called as native subclasses.

Natively GPU-accelerated via PyTorch, TorchVision also features a number of pre-trained models, with 3D ResNet-18 specifically addressing video detection tasks.

TorchVision may be a little overtooled for a custom project where latency and a frugal resource load are key factors. The ease with which it can get a project up and running can be offset later by framework overhead. Other leading FOSS detection resources described above are generally leaner and more agile, depending on configuration and allotted resources.

Nonetheless, TorchVision benefits directly from PyTorch's considerable scalability, with a mature feature set for video object detection.

At the current time, nearly 500 open-source object detection models are estimated to be available, though not all deal with video and many of them intersect with the popular frameworks listed above, or else utilize them in custom host environments.

Commercial APIs for object recognition and detection

As we observed in a recent article on OCR algorithms, high-end commercial APIs can be useful as proving grounds for an object detection workflow. Their primary advantages are a fast start, reliability of uptime, and rich feature sets. On the downside, they're often costlier at scale than a well-designed custom-built API, while possible future restrictions and price rises are difficult to anticipate.

Google Video Intelligence API

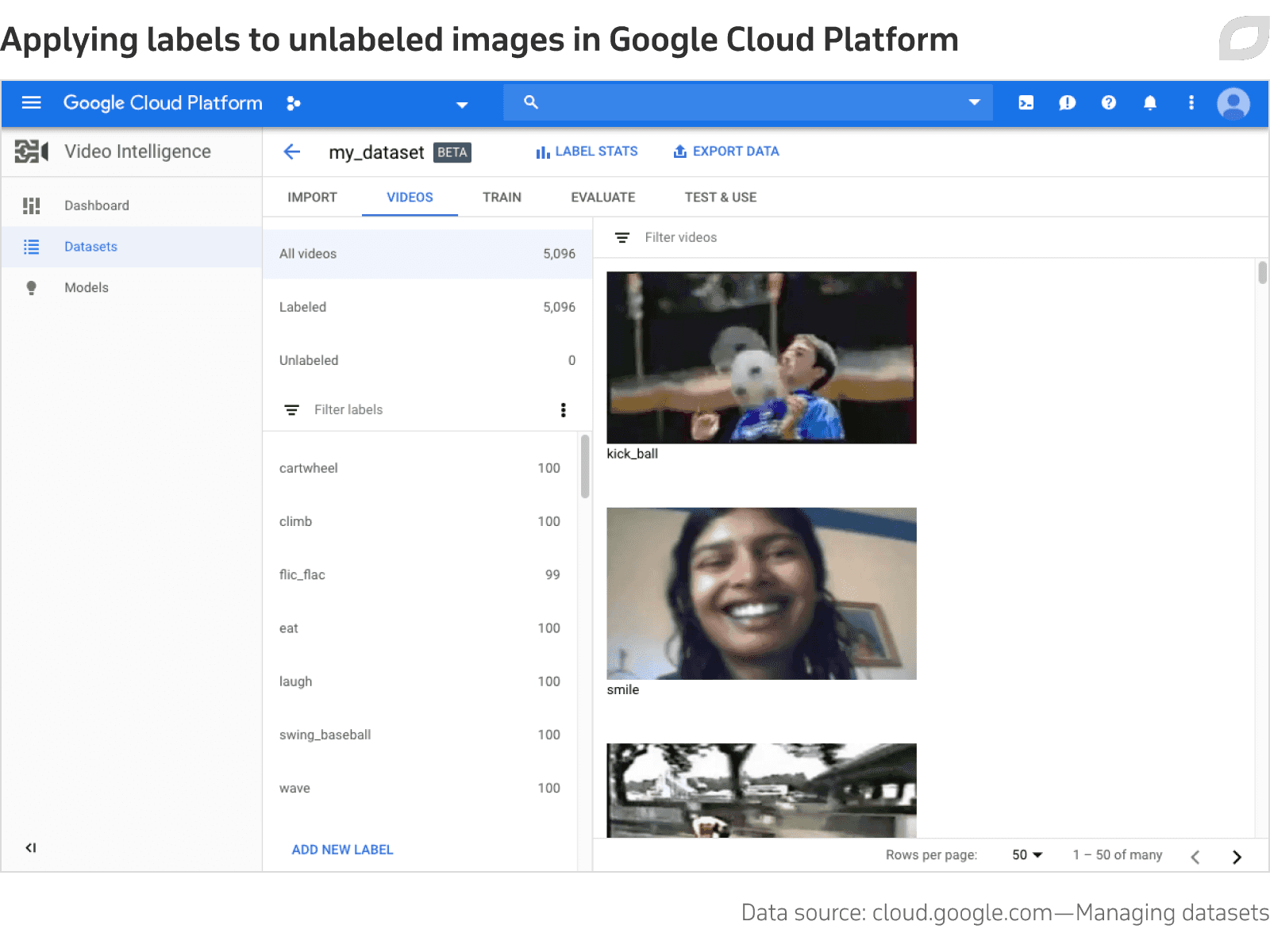

Google is a leading FAANG investor in computer vision research, and its Video Intelligence framework offers a wide gamut of production-ready features, including object recognition in video, live OCR capabilities, logo detection, explicit content detection, and person detection. Cloud Video Intelligence (CVI) comes with a formidable offering of pre-trained models, and custom training is also available.

CVI's pricing structure, while fully documented, is complex enough to be a study in its own right. A calculator is provided to help estimate costs.

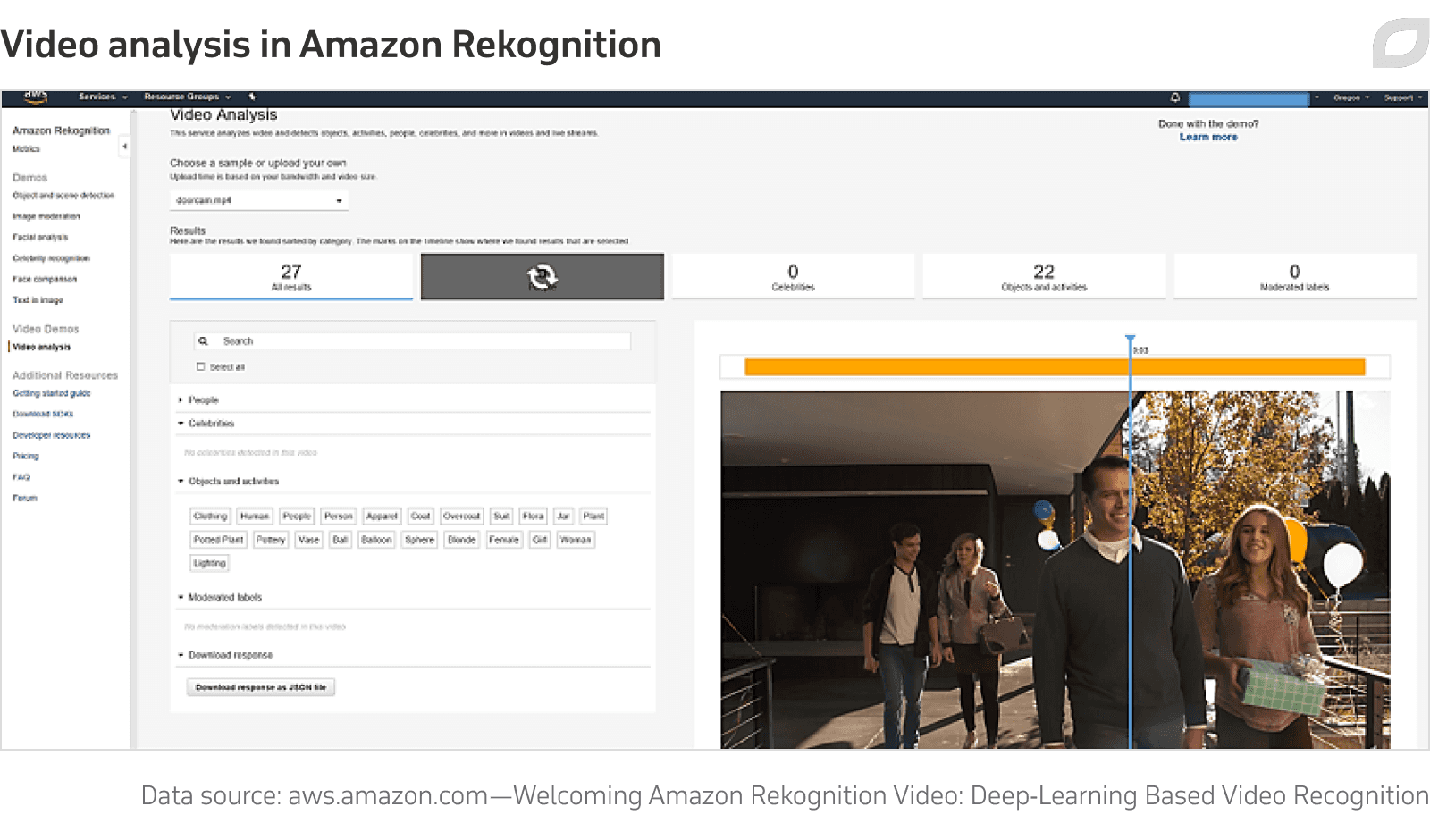

Amazon Rekognition

Launched in 2017, Amazon's video analysis offering is broadly comparable to CVI, with a hugely extensible ecosystem that includes a wide variety of pre-trained models, and facilities for bespoke model training.

New features are regularly added to Rekognition's object detection capabilities, such as PPE detection. Rekognition can also scan video for specific sequence content, such as bar codes or the end credits in a movie, and can provide chapter segmentation.

Rekognition's pricing for video analysis has a separate tier for facial metadata storage, but is otherwise divided into a number of similarly priced categories, several of which reflect Amazon's special interest in ecommerce, such as label recognition and customer tracking.

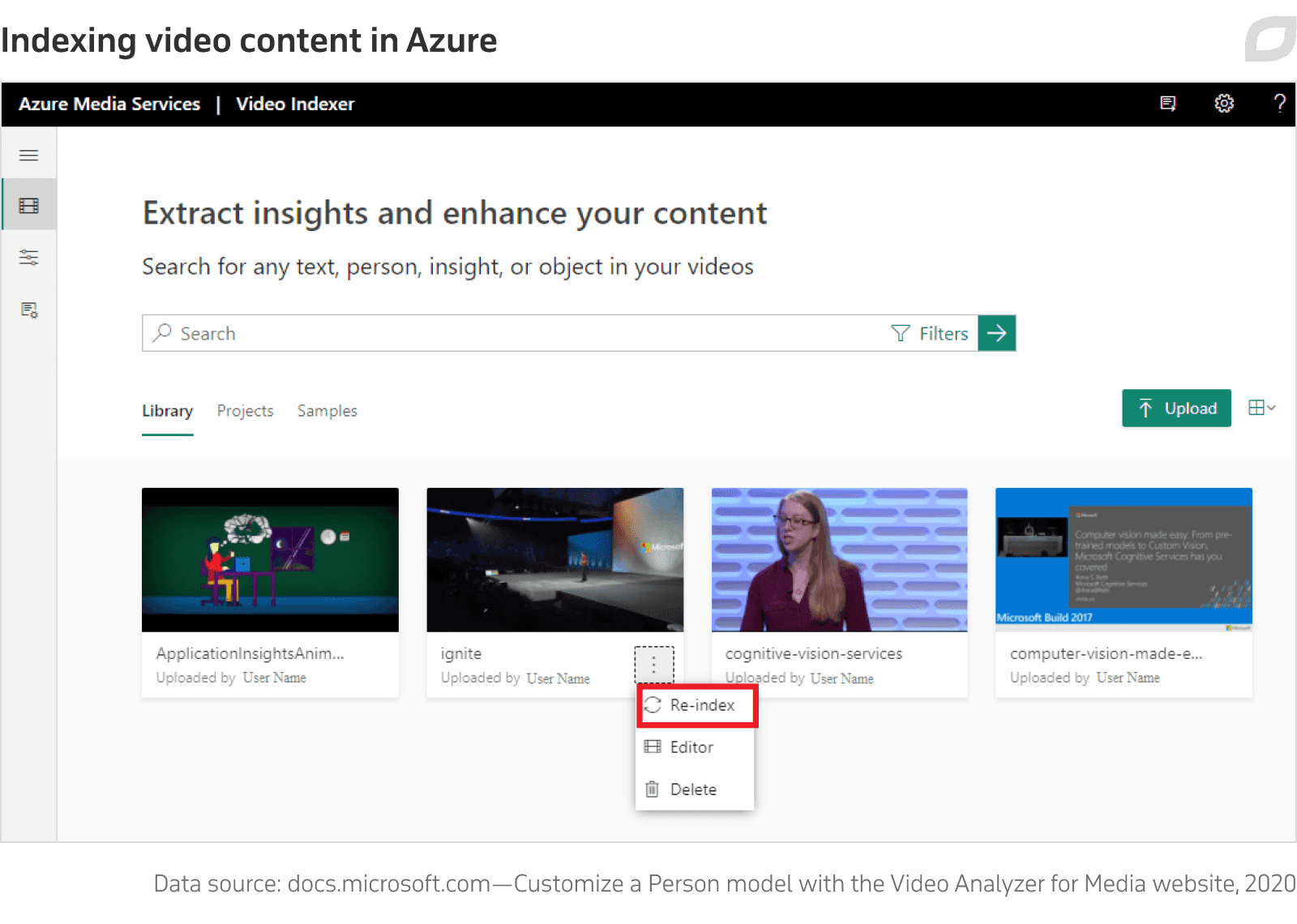

Microsoft Image Processing API

Microsoft's video processing offering is somewhat buried in the far wider range of Azure subscription services, but offers a number of easily deployable video object detection algorithms. Object detection models can be created via Azure's Custom Vision training and prediction resources or the wider scope of Cognitive Services.

Microsoft Computer Vision pricing is perhaps the most difficult to estimate among these three big-tech leaders in video object detection.

Other commercial video object detection APIs include:

- Clarifai: Delaware-based Clarifai offers an image recognition API with 14 pre-trained models, specializing in fashion identification and food, as well as common objects, explicit content, celebrities and faces.

- Valossa Video Recognition API: Valossa offers a wide range of detection types in its Video Recognition API, including live face analysis, explicit content monitoring, and scene indexing. However, its core focus is on on-premises video surveillance solutions.

- Ximilar Visual Automation: Ximilar is a business-focused video object detection API with a clear emphasis on fashion retail applications. Though custom models are possible, Ximilar positions itself as a one-stop shop for a limited range of object domains.